December 07, 2016 ( last updated : December 07, 2016 )

messaging

integration

iot

https://github.com/alainpham/amq-broker-network

Many organisations are now facing a challenge when it comes to choosing and setting up the right messaging infrastructure. It must be able to scale and handle a massive number of parallel connections. This challenge often emerges with IoT & Big Data projects, where a massive number of sensors may be connected and produce messages that need to be processed and stored.

This post explains how to address this challenge using the concept of networks of brokers in JBoss Active MQ.

An important thing to bear in mind when speaking of scaling the messaging infrastructure is that one must consider 2 distinct capabilities.

At the time of writing, the use cases in this post have been tested with JBoss Active MQ 6.3.0.

Choosing the right broker network topology is a crucial step. There are many examples of network topology that can be found in the official JBoss ActiveMQ documentation : https://access.redhat.com/documentation/en/red-hat-jboss-a-mq/6.3/paged/using-networks-of-brokers/chapter-6-network-topologies

To have a better understanding, we need to have the following concepts in mind. Each broker can have :

In this article we will take the example of an IoT use case where the main focus is on :

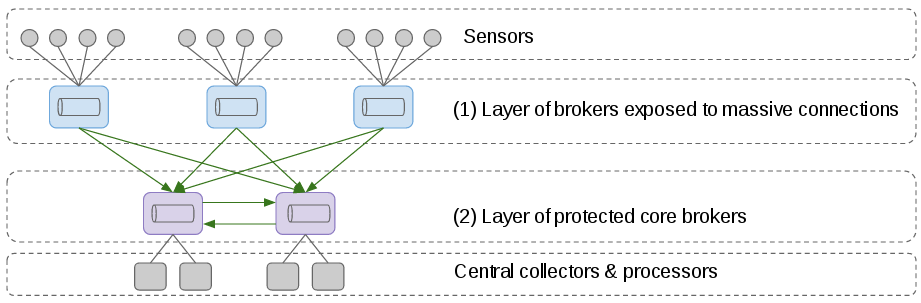

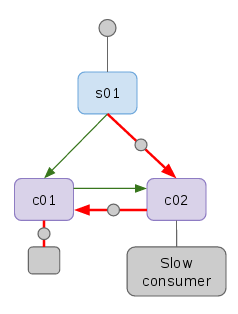

In such a scenario, we would like to set up a concentrator topology as shown below.

This topology allows us to set up stable and controlled connections from the brokers of layer 1 towards layer 2. The central brokers do not have to deal with sensors connecting and disconnecting. Each green arrow represents a network connector. Note that these have a direction: brokers from layer 1 can forward messages to all brokers from layer 2. We also allow messages to travel between brokers of layer 2. We will see later that this allows the network to better balance the workload and facilitates scaling up and scaling down.
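As a rough illustration of the connectors described above, here is a minimal sketch of what the `networkConnectors` sections could look like. The connector names, host names and ports are placeholders, and the `networkTTL` value is an assumption to be tuned to your own topology; the actual configuration in the sample repository may differ.

```xml
<!-- Layer 1 broker: one-way bridges towards each central broker. -->
<!-- Connector names, hosts and ports are hypothetical. -->
<networkConnectors>
  <networkConnector
      name="s01-to-c01"
      uri="static:(tcp://localhost:61620)"
      duplex="false"
      networkTTL="2" />
  <networkConnector
      name="s01-to-c02"
      uri="static:(tcp://localhost:61621)"
      duplex="false"
      networkTTL="2" />
</networkConnectors>

<!-- Layer 2 broker c01: bridge towards its neighbor c02. -->
<!-- c02 would declare the mirror connector towards c01. -->
<networkConnectors>
  <networkConnector
      name="c01-to-c02"
      uri="static:(tcp://localhost:61621)"
      duplex="false"
      networkTTL="2" />
</networkConnectors>
```

With `duplex="false"` each connector only forwards messages in one direction, which matches the directed arrows of the topology. A `networkTTL` above 1 is assumed here so that a message arriving from layer 1 can still take one more hop between layer 2 brokers.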

Usually when scaling up, we want the clients that are already connected to be redistributed among the newly available brokers. This is especially important for the clients connected to layer 2, as we might not want to statically bind them to one broker. To achieve this, we can set the following options within the transport connectors of the central brokers.

    <transportConnectors>
      <transportConnector
          name="clients"
          rebalanceClusterClients="true"
          updateClusterClients="true"
          updateClusterClientsOnRemove="true"
          updateClusterFilter="amq-c.*"
          uri="tcp://localhost:61620" />
    </transportConnectors>

Further options on the transport connector can be found here: https://access.redhat.com/documentation/en/red-hat-jboss-a-mq/6.3/paged/connection-reference/appendix-c-server-options
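These broker-side options only take effect for clients that connect through the failover transport, since that is the transport able to receive cluster updates and reconnect elsewhere. As a sketch, assuming a Spring-configured client, the connection factory could be declared as follows. The bean id and the second broker port are hypothetical.

```xml
<!-- Client-side connection factory using the failover transport. -->
<!-- Bean id and the 61621 port are placeholders for illustration. -->
<bean id="jmsConnectionFactory"
      class="org.apache.activemq.ActiveMQConnectionFactory">
  <property name="brokerURL"
            value="failover:(tcp://localhost:61620,tcp://localhost:61621)" />
</bean>
```

The URIs listed here only serve as the initial set of brokers to try. Once connected, the client can learn about other brokers from the cluster updates sent by the central brokers.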

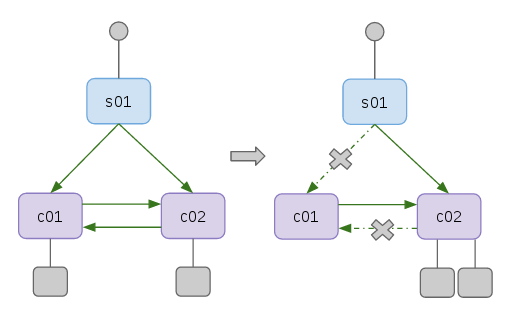

Scaling down is a bit more challenging. This is because when shutting down an instance, there might still be messages remaining in the broker. The strategy we can apply to properly shut down a broker (named c01 in this example) in a network is the following:

There might be situations where this procedure is not enough. Messages may remain when no active consumers for them exist anywhere in the network. In this case, I recommend looking at Josh Reagan's blog post: http://joshdreagan.github.io/2016/08/22/decommissioning_jboss_a-mq_brokers/. It describes how to read the message store of the broker being decommissioned and forward all remaining messages.

If you run the tests that are provided in the sample code (see below), you might notice that sometimes a message that reaches a broker in layer 2 is forwarded to its neighbor on layer 2 instead of being consumed by the local client.

This is a very powerful feature of Active MQ that allows messages to be consumed as fast as possible within the network.

This usually happens when the local consumer is slowing down. A good messaging system is one that tends to contain 0 messages (all messages are consumed as soon as they are produced). So what we can observe here is that the network of brokers is doing its best to move the messages around so that they are consumed as fast as possible.

On the other hand, the consequence of this is that messages might get stuck at some point. There is a rule saying that a message cannot go back to a broker that it has already visited. So if the fast consumer suddenly disconnects, our message here will get stuck in c01.

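To avoid stuck messages, a destination policy can allow a message to be replayed back to a broker it has already visited when there are no local consumers left:

    <policyEntry queue="sensor.events" queuePrefetch="1">
      <networkBridgeFilterFactory>
        <conditionalNetworkBridgeFilterFactory
            replayDelay="1000" replayWhenNoConsumers="true" />
      </networkBridgeFilterFactory>
    </policyEntry>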
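For context, here is a sketch of where such a policy entry sits inside the broker configuration. The broker name is a placeholder, and the `queue=">"` wildcard, which applies the policy to every queue, is shown only as a possible variant; whether that is desirable depends on your destinations.

```xml
<!-- Placement of the policy entry within a central broker's configuration. -->
<!-- brokerName is hypothetical; queue=">" matches all queues. -->
<broker xmlns="http://activemq.apache.org/schema/core" brokerName="amq-c01">
  <destinationPolicy>
    <policyMap>
      <policyEntries>
        <policyEntry queue=">" queuePrefetch="1">
          <networkBridgeFilterFactory>
            <conditionalNetworkBridgeFilterFactory
                replayDelay="1000" replayWhenNoConsumers="true" />
          </networkBridgeFilterFactory>
        </policyEntry>
      </policyEntries>
    </policyMap>
  </destinationPolicy>
</broker>
```

The policy would need to be present on every broker where messages could otherwise get stuck, which in this topology means the layer 2 brokers.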

    mvn test

    [ main] TestAMQNetwork INFO Received on collector01 500
    [ main] TestAMQNetwork INFO Received on collector02 500
    [ main] TestAMQNetwork INFO Total received 1000
    [ main] TestAMQNetwork INFO amqs01 enqueue 1000
    [ main] TestAMQNetwork INFO amqs01 dequeue 1000
    [ main] TestAMQNetwork INFO amqs01 dispatch 1000
    [ main] TestAMQNetwork INFO amqc01master enqueue 501
    [ main] TestAMQNetwork INFO amqc01master dequeue 501
    [ main] TestAMQNetwork INFO amqc01master dispatch 501
    [ main] TestAMQNetwork INFO amqc02master enqueue 501
    [ main] TestAMQNetwork INFO amqc02master dequeue 501
    [ main] TestAMQNetwork INFO amqc02master dispatch 501

    ############### before scaleDown #########################
    [ main] TestAMQNetwork INFO ###################################
    [ main] TestAMQNetwork INFO ###################################
    [ main] TestAMQNetwork INFO Received on collector01 250
    [ main] TestAMQNetwork INFO Received on collector02 250
    [ main] TestAMQNetwork INFO Total received 500
    [ main] TestAMQNetwork INFO amqs01 enqueue 500
    [ main] TestAMQNetwork INFO amqs01 dequeue 500
    [ main] TestAMQNetwork INFO amqs01 dispatch 500
    [ main] TestAMQNetwork INFO amqc01master enqueue 250
    [ main] TestAMQNetwork INFO amqc01master dequeue 250
    [ main] TestAMQNetwork INFO amqc01master dispatch 250
    [ main] TestAMQNetwork INFO amqc02master enqueue 250
    [ main] TestAMQNetwork INFO amqc02master dequeue 250
    [ main] TestAMQNetwork INFO amqc02master dispatch 250

    ############### scaleDown #########################
    [ main] TestAMQNetwork INFO ##############################
    [ main] TestAMQNetwork INFO ##############################
    [ main] TestAMQNetwork INFO Received on collector01 501
    [ main] TestAMQNetwork INFO Received on collector02 499
    [ main] TestAMQNetwork INFO Total received 1000
    [ main] TestAMQNetwork INFO amqs01 enqueue 1000
    [ main] TestAMQNetwork INFO amqs01 dequeue 1000
    [ main] TestAMQNetwork INFO amqs01 dispatch 1000
    [ main] TestAMQNetwork INFO amqc01master enqueue 250
    [ main] TestAMQNetwork INFO amqc01master dequeue 250
    [ main] TestAMQNetwork INFO amqc01master dispatch 250
    [ main] TestAMQNetwork INFO amqc02master enqueue 750
    [ main] TestAMQNetwork INFO amqc02master dequeue 750
    [ main] TestAMQNetwork INFO amqc02master dispatch 750

    ############### before scaleUp #########################
    [ main] TestAMQNetwork INFO Received on collector01 250
    [ main] TestAMQNetwork INFO Received on collector02 250
    [ main] TestAMQNetwork INFO Total received 500
    [ main] TestAMQNetwork INFO amqs01 enqueue 500
    [ main] TestAMQNetwork INFO amqs01 dequeue 500
    [ main] TestAMQNetwork INFO amqs01 dispatch 500
    [ main] TestAMQNetwork INFO amqc01master enqueue 0
    [ main] TestAMQNetwork INFO amqc01master dequeue 0
    [ main] TestAMQNetwork INFO amqc01master dispatch 0
    [ main] TestAMQNetwork INFO amqc02master enqueue 500
    [ main] TestAMQNetwork INFO amqc02master dequeue 500
    [ main] TestAMQNetwork INFO amqc02master dispatch 500

    ############### scaleUp #########################
    [ main] TestAMQNetwork INFO Received on collector01 500
    [ main] TestAMQNetwork INFO Received on collector02 500
    [ main] TestAMQNetwork INFO Total received 1000
    [ main] TestAMQNetwork INFO amqs01 enqueue 1000
    [ main] TestAMQNetwork INFO amqs01 dequeue 1000
    [ main] TestAMQNetwork INFO amqs01 dispatch 1000
    [ main] TestAMQNetwork INFO amqc01master enqueue 252
    [ main] TestAMQNetwork INFO amqc01master dequeue 252
    [ main] TestAMQNetwork INFO amqc01master dispatch 252
    [ main] TestAMQNetwork INFO amqc02master enqueue 752
    [ main] TestAMQNetwork INFO amqc02master dequeue 752
    [ main] TestAMQNetwork INFO amqc02master dispatch 752

Thanks for reading!
